DeepSeek's Reasoning Memotypes: Could Linguistic Patterns Be Replicating Through Synthetic Data?

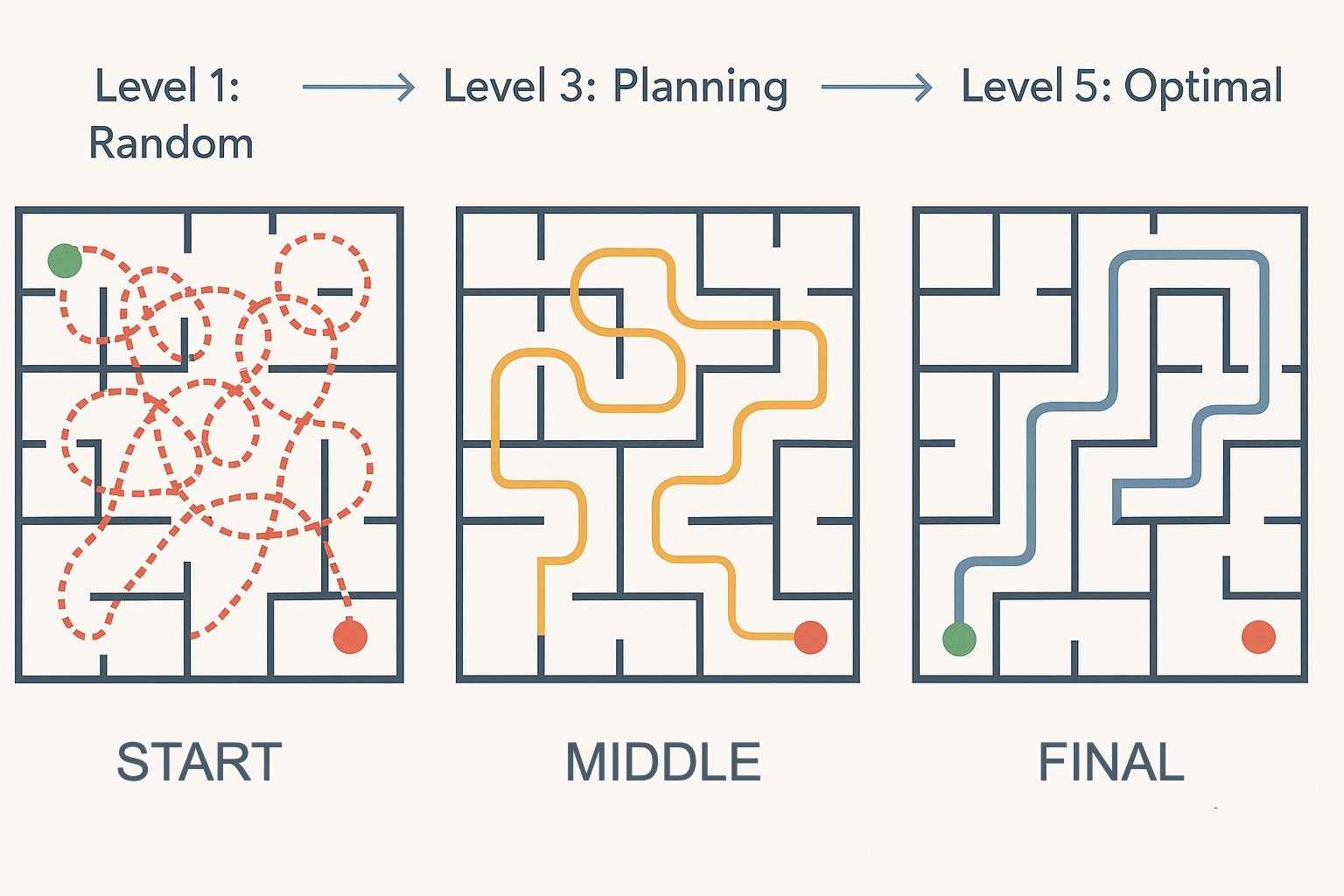

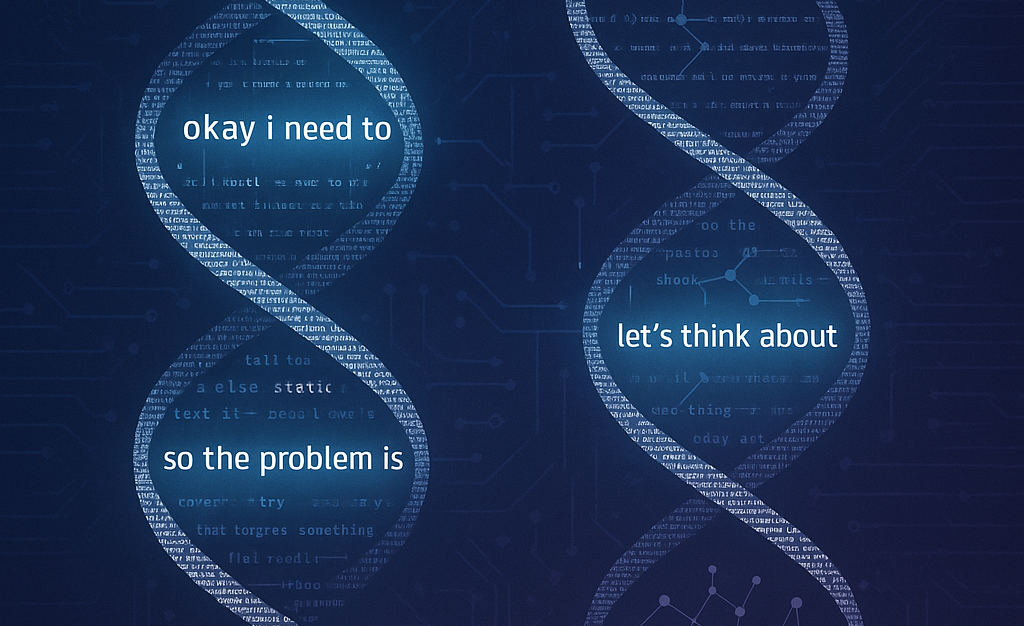

Analysis Code: GitHub Gist

Introduction

In biology, DNA provides the blueprint for how organisms develop and reproduce. In the realm of synthetic reasoning data, we observe a similar phenomenon: specific linguistic patterns that function as “reasoning memotypes”, self-replicating units of thought structure that propagate through synthetic data generation.

Analysis of Nvidia’s Nemotron post-training dataset^[1], which contains over 30 million synthetic examples generated using DeepSeek R1^[2] and other reasoning models, reveals systematic linguistic patterns that appear with extraordinary frequency. These patterns function like genetic code for reasoning behavior, encoding not just what models think, but how they structure and express thought itself. ...
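As a rough illustration of what "appearing with extraordinary frequency" could mean in practice, the sketch below measures the share of examples that contain each candidate phrase at least once. This is not the linked analysis code. The phrase list, the function name `phrase_document_frequency`, and the sample texts are all hypothetical, chosen only to show the shape of such a measurement.

```python
import re
from collections import Counter

# Hypothetical candidate phrases for illustration; not taken from the article's results.
CANDIDATE_PHRASES = ["wait", "let me think", "alternatively"]


def phrase_document_frequency(texts, phrases):
    """Return, for each phrase, the fraction of texts containing it at least once."""
    # Word boundaries keep "wait" from matching inside words such as "await".
    patterns = {
        phrase: re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        for phrase in phrases
    }
    counts = Counter()
    for text in texts:
        for phrase, pattern in patterns.items():
            if pattern.search(text):
                counts[phrase] += 1
    total = len(texts)
    return {phrase: (counts[phrase] / total if total else 0.0) for phrase in phrases}


# Toy stand-ins for reasoning traces; a real run would stream dataset rows instead.
samples = [
    "Wait, let me think about this again.",
    "The answer is 4.",
    "Alternatively, we could factor the expression. Wait, that fails.",
]
freqs = phrase_document_frequency(samples, CANDIDATE_PHRASES)
for phrase, share in freqs.items():
    print(f"{phrase!r}: {share:.1%} of examples")
```

Counting each phrase once per example, rather than summing raw occurrences, keeps a single very long trace from dominating the statistic. Either choice is defensible depending on the question being asked.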